Web Scraping - Selenium

Scraping data is a blast. The thrill of obtaining a brand-new dataset, one that potentially no one else has ever assembled before, is a high like no other.

Normally, my go-to scraping tool is BeautifulSoup or Scrapy. However, sometimes we run across a site that populates its data dynamically with additional JavaScript calls after the page loads. Kickstarter is one example.

For my third project at Metis, we were tasked with developing a natural language processing project. We would use unsupervised learning tools such as clustering and topic modeling to find meaning in a corpus. I chose Kickstarter pitches as my dataset. My goals were twofold:

- Develop topics for each pitch using Latent Dirichlet Allocation (LDA) or K-Means

- Use those topics and Term Frequency-Inverse Document Frequency (TF-IDF) features to build a model that predicts a pitch's funding outcome

First, though, I had to build the corpus by scraping Kickstarter pitches. My first attempt used BeautifulSoup; however, I noticed that the DOM nodes that were supposed to contain the data kept coming up empty. What could be the cause of this?

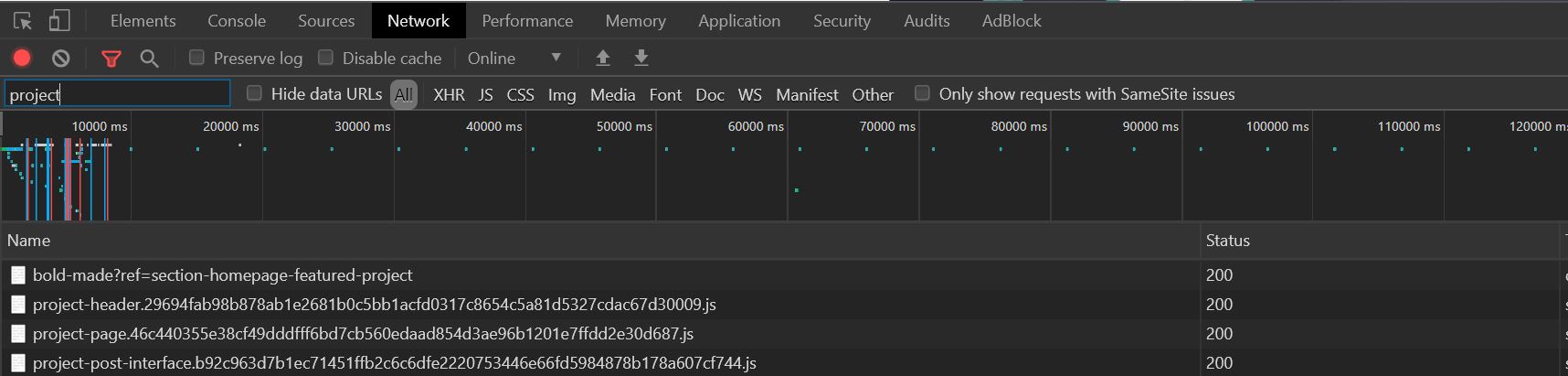

After further inspection, it was clear the site was adding the data dynamically using JavaScript after the initial HTML loaded. BeautifulSoup was only ever seeing the shell page!
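To see why the nodes come up empty, here is a minimal sketch using a made-up shell page. The HTML and the `pitch` id are assumptions for illustration only, not Kickstarter's actual markup. BeautifulSoup parses the markup it is given but never executes the script, so the `div` is found and stays empty.

```python
from bs4 import BeautifulSoup

# Hypothetical shell page: the <div> is empty in the raw HTML and would
# only be filled in once a browser runs the <script>.
SHELL_HTML = """
<html>
  <body>
    <div id="pitch"></div>
    <script>
      document.getElementById("pitch").textContent = "Loaded later by JavaScript";
    </script>
  </body>
</html>
"""

soup = BeautifulSoup(SHELL_HTML, "html.parser")

# The node exists, so the selector "works"...
pitch = soup.find("div", id="pitch")

# ...but BeautifulSoup does not run JavaScript, so there is no text in it.
pitch_text = pitch.get_text(strip=True)
print(repr(pitch_text))  # → ''
```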

To solve this, I switched to a headless browser, i.e. a web browser without a graphical user interface. Instead of just fetching the raw HTML, it loads the page in a real browser such as Chrome, so the JavaScript runs and the DOM is fully populated. Headless browsers are normally used to test web apps, but they also make great web scrapers in cases like this. My tool of choice was Selenium, which automates the browser. Luckily, I was able to port all of my DOM selectors over to Selenium functions, so my BeautifulSoup work was not for naught. Below you'll find the result of all this work.

Enjoy!